Contextual anchoring is the cleanest way to get predictable, useful results from an AI. Instead of asking an open question and hoping for a usable answer, you give the model a concise frame; who it is, the situation it’s in, and the shape of the output you want. That small upfront structure changes everything.

Do it well and the model writes in the right voice, hits the right level of detail, and returns fewer hallucinations. Do it carelessly and you lock the model into a narrow view or introduce hidden assumptions. The trick is learning which anchors help and which ones hurt.

This post is a practical guide. I’ll show what anchors are, why they change model behavior, how to write them, how to test them, and most importantly, real, copy-paste prompt examples you can use.

Key takeaways:

- Contextual anchors = Role + Scenario + Output format. These three elements give the model the interpretive frame to produce targeted responses.

- Anchors raise precision and consistency but can also create blind spots; always treat anchors as testable design elements, not magic.

- Use short, front-loaded anchors (place them at the top of your prompt) and require the model to state assumptions to reduce overconfidence.

- Test anchors systematically: neutral vs. anchored vs. counter-anchored runs reveal sensitivity and robustness.

- Operationalize anchors in product flows via template libraries, versioning, and audits.

What is Contextual Anchoring

Contextual anchoring in prompt writing is simple: give the model a compact interpretation frame before asking it to do work. The frame typically contains three parts:

- Role: who the AI should be (e.g., “You are a senior UX researcher”).

- Scenario: the situation, audience, or constraints (e.g., “preparing a 10-minute briefing for executives”).

- Output format: the expected structure and tone (e.g., “three headings with short paragraphs and one recommendation”).

Together, these act like a lens. They steer the model toward a subset of its learned patterns that match your purpose. That steering reduces noise (irrelevant content) and increases the chance the output is ready to use.

The Mechanics of Contextual Anchoring in Plain Terms

LLMs are statistical continuers: they predict what comes next based on the tokens already present. Put relevant context at the start, and the model’s next-token probabilities shift toward completions that match that context.

Two things follow:

- Higher relevance: the model is more likely to select phrasing and facts that match the role and scenario you gave.

- Lower variance: repeated runs under the same anchor produce more consistent outputs.

But anchors are not neutral. They change the model’s inductive bias. That’s why a well-chosen anchor helps and a poorly chosen or hidden anchor harms.

How to Craft a High-Quality Anchor (step-by-step)

Use this lightweight recipe whenever you write a prompt meant to be reliable and repeatable.

- Define the goal first. If you can’t state what you need in one sentence, refine until you can.

- Pick a precise role. Use a recognizable title that implies scope and responsibility (e.g., “medical reviewer,” “product operations lead,” “senior developer”). Avoid vague directives like “be helpful.”

- Set the scenario. Include audience, constraints, and context that affect content (time, budget, format). Keep it to one or two crisp sentences.

- Specify the output format. Length, structure, bullet vs. prose, number of examples, and style tone. The clearer, the better.

- Ask for an assumptions list. Require the model to state what it assumes before producing the main answer. This reduces overconfidence and surfaces hidden anchors.

- Add guardrails for risky domains. If the output affects people, require citations, refusal rules, or verification steps.

Place this entire anchor at the beginning of your prompt so it conditions the model’s response.

Prompt Examples

Below are practical, ready-to-use anchors across common tasks. Use them as templates in your projects.

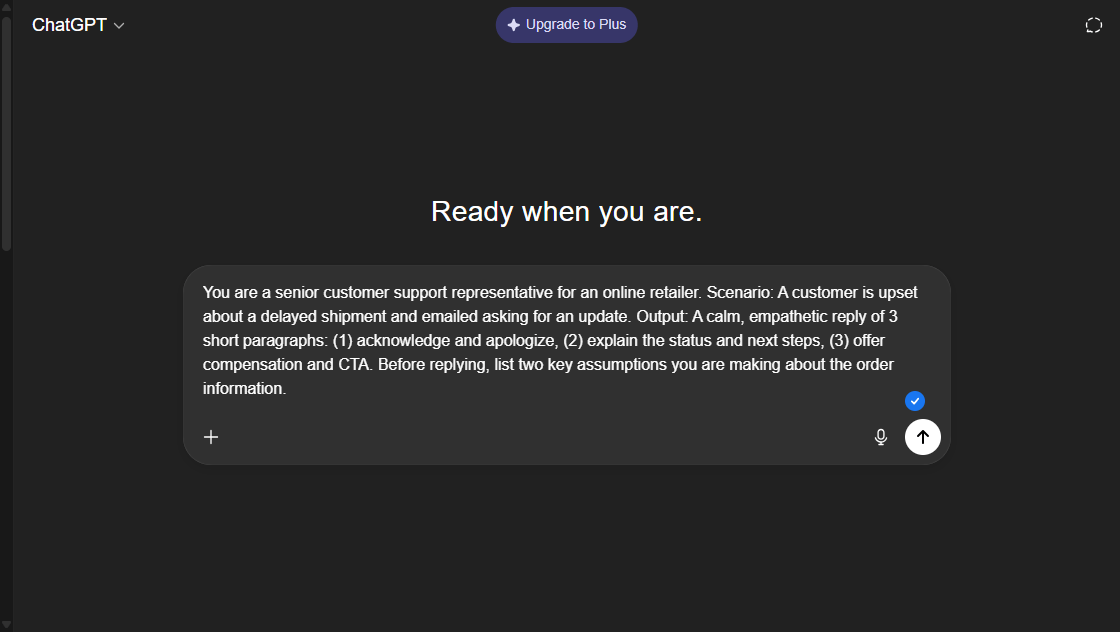

Customer support (calm explanation)

You are a senior customer support representative for an online retailer.

Scenario: A customer is upset about a delayed shipment and emailed asking for an update.

Output: A calm, empathetic reply of 3 short paragraphs: (1) acknowledge and apologize, (2) explain the status and next steps, (3) offer compensation and CTA.

Before replying, list two key assumptions you are making about the order information.

Product decision (concise briefing)

You are a product manager at a mid-stage SaaS startup.

Scenario: Prepare a 400-word briefing for the executive team summarizing the pros and cons of launching a freemium tier.

Output: Three headings (Benefits, Risks, Recommendation), each with a short paragraph, and one one-line suggested next step.

Start by listing the top three assumptions about user behavior.

Code generation (domain-aware)

You are a senior Python developer experienced in data validation.

Scenario: Write a function to validate international phone numbers without external libraries.

Output: Provide code in a single block, include one-line explanation of algorithmic choices, and a short unit test example.

Before the code, list any assumptions about input formats (e.g., strings, field separators).

Research summary (investor-friendly)

You are an analyst summarizing research for investors.

Scenario: Summarize recent trends in battery storage in under 300 words for a non-technical investment committee.

Output: Two short paragraphs (Current State, Investment Implications) and a 3-item bullet list of risks.

List two assumptions you made about market data sources.

Creative writing (specific voice)

You are a 1920s noir novelist.

Scenario: Write a 350-word opening scene set in a rain-soaked dockside bar that establishes mood and the protagonist’s attitude.

Output: One continuous scene paragraph with at least one line of dialogue.

Advanced Anchoring Patterns

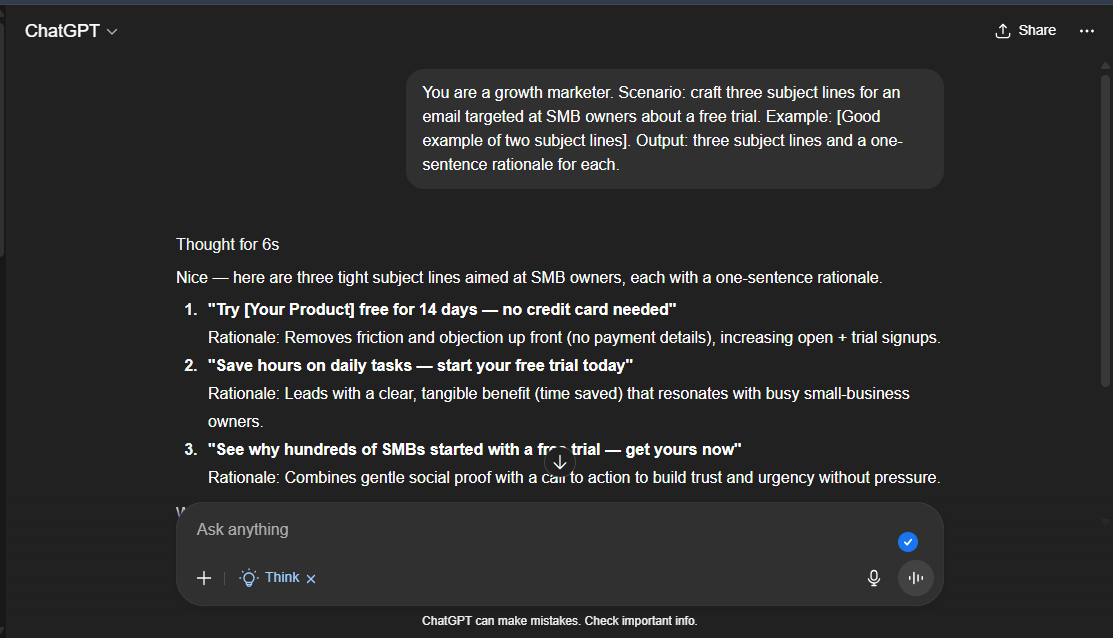

Layered Anchors

Combine role, scenario, and sample outputs plus a short example to further tighten expectations:

You are a growth marketer. Scenario: craft three subject lines for an email targeted at SMB owners about a free trial.

Example: [Good example of two subject lines].

Output: three subject lines and a one-sentence rationale for each.

Hybrid anchors with few-shot examples

Pairing a role + scenario with 1–3 examples (few-shot) gives the model both a frame and exemplars of the desired output. Use examples sparingly, they’re heavy anchors.

Two-stage anchoring

Stage 1: fast anchored summary for speed.

Stage 2: deeper anchored verification (assumptions, citations, and alternatives). Use stage 2 for audits or high-stakes outputs.

Testing Anchors: How to Measure Whether They Help or Hurt

Treat anchors like design features and measure them. Use three canonical tests.

- Neutral vs. Anchored (soundness check): Run the task with no anchor and with your anchor. Compare relevance, tone, and structure adherence.

- Counter-anchored (sensitivity test): Run the prompt under a different plausible role (e.g., “You are a junior analyst” vs. “You are a senior analyst”). Large swings show anchor sensitivity.

- Adversarial anchor (robustness test): Inject a misleading detail in the context and see if the model overfits to the irrelevant anchor. If it does, require assumption listing or explicit counterfactuals.

Track a few simple metrics: prompt adherence (format followed?), relevance (human-rated), hallucination rate (claims lacking support), and sensitivity index (variance across anchors). Log results in a small spreadsheet and version the anchor templates.

Common Failures and How to Fix Them

- Over-anchoring (tunnel vision): If outputs ignore viable alternatives, loosen the anchor or explicitly ask for alternatives.

- Hidden anchors (system/UI drift): Audit system messages, example prompts, and UI elements that the model may see. Surface them in documentation.

- False authority: Anchored outputs often read confidently. Force the model to list assumptions and confidence levels, and require human review for high-stakes use.

- Anchor decay in multi-turn flows: Re-state or re-attach the anchor in follow-up turns to keep the context stable.

Short fix examples:

- Add: “Now list three alternative viewpoints” to reduce tunnel vision.

- Add: “Assume the data below may be incomplete; flag missing fields” to avoid false authority.

Operationalizing Anchors in Products and Teams

- Prompt library: Store canonical anchor templates with version, owner, and test history. Make them discoverable to designers and engineers.

- Template injection: In product code, inject the canonical anchor as the system message that precedes user input. This keeps behavior consistent across sessions.

- Assumption logging: Record which anchor template was used with each model response for auditability.

- Release checks: Require sensitivity tests before any anchor goes into production.

- Anchor steward: Assign someone to own updates and incident responses for anchors used in customer-facing flows.

Ethics and Transparency

Anchors shape answers. When outputs influence people’s decisions, disclose the framing. If you present a model’s output as neutral or impartial, but you used a persona or viewpoint anchor, that should be visible to users. For regulated domains (medical, legal, financial), require:

- Auditable anchor usage records.

- Citations or evidence for claims.

- Explicit refusal policies when data is missing.

Being transparent about anchors reduces misuse and increases trust.

Practical Checklist Before You Push a Prompt Live

- Role defined?

- Scenario necessary and concise?

- Output format explicit and testable?

- Assumptions requested?

- Safety/verification steps for high-stakes outputs?

- Sensitivity tests run and logged?

If any item is “no,” refine the anchor.

The Right Question to Ask

Contextual anchoring is not a fancy trick; it’s a design pattern for predictable AI behavior. The better your anchor (clear role, precise scenario, and well-defined format) the less clean-up you’ll do after the model responds. But anchors are not neutral: they shape outcomes and hide assumptions. So ask not only “What anchor gets the best answer?” but also “How fragile is that anchor under realistic variation?”

“The AI will give you what you ask for, the trick is asking for the right thing in the right way.”

References for Further Reading

- Human bias in AI models? Anchoring effects and mitigation strategies in large language models.

-

Anchoring Bias in Large Language Models.

-

An Empirical Study of the Anchoring Effect in LLMs.

-

Cognitive bias in clinical large language models

Discover more from Aree Blog

Subscribe now to keep reading and get access to the full archive.