Quantum-enhanced machine learning is the name given to approaches that combine quantum computing with machine learning to handle certain tasks in new ways. At its simplest, imagine using tiny quantum processors as specialized pattern-recognition engines inside a larger, classical workflow.

Today those engines are small and noisy, but they already offer fresh perspectives on feature representation, similarity measures, and hybrid model design.

What is Quantum-Enhanced Machine Learning?

The phrase describes methods in which quantum circuits contribute something a classical system cannot provide, or can provide only at much greater cost, for a particular problem.

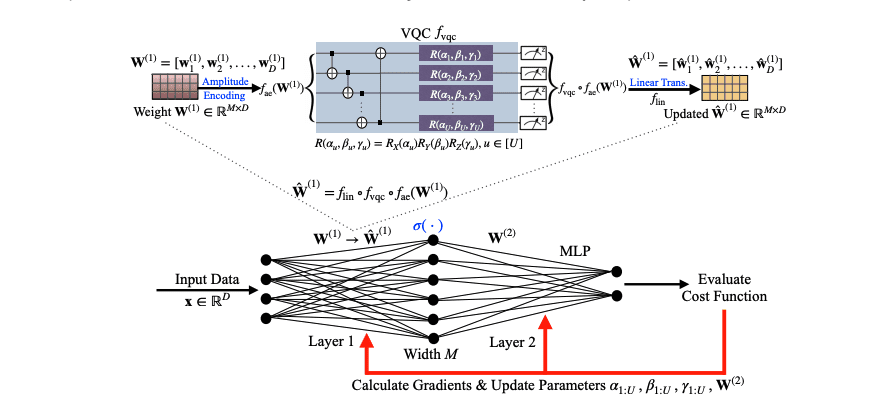

Two practical patterns dominate current work. In one, data are encoded into quantum states and a quantum circuit computes overlaps or similarities that become kernel values for classical algorithms. In the other, a parameterized quantum circuit acts like a trainable layer inside a hybrid model; classical optimizers tune the circuit’s parameters against a loss function.
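The first pattern can be illustrated with a small classical simulation. The sketch below is not taken from any particular library. It assumes a simple angle encoding (one qubit per feature, each prepared with an RY rotation) and defines the kernel as the squared overlap of two encoded states. The names `angle_encode`, `fidelity_kernel`, and `gram_matrix` are hypothetical, chosen only for illustration.

```python
import math

def angle_encode(x):
    """Statevector of a product state where qubit i is RY(x[i])|0>.

    Assumption: one qubit per feature, amplitudes stay real.
    """
    state = [1.0]
    for xi in x:
        qubit = [math.cos(xi / 2), math.sin(xi / 2)]
        # Tensor product of the state built so far with the new qubit.
        state = [a * b for a in state for b in qubit]
    return state

def fidelity_kernel(x, y):
    """Kernel value: squared overlap between the two encoded states."""
    sx, sy = angle_encode(x), angle_encode(y)
    overlap = sum(a * b for a, b in zip(sx, sy))
    return overlap ** 2

def gram_matrix(X):
    """Kernel matrix that a classical kernel method could consume."""
    return [[fidelity_kernel(a, b) for b in X] for a in X]

# Three made-up two-feature points; the diagonal entries are 1 up to rounding.
K = gram_matrix([[0.3, 1.2], [1.0, 0.4], [2.5, 2.9]])
```

On real hardware the overlap would be estimated from repeated measurements rather than computed exactly, and the state vector grows exponentially with the number of qubits, which is why this direct simulation only works for small examples.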

Both approaches aim to exploit the exponentially large vector space of quantum states to create representations that can separate or describe structure that is costly to produce classically.

These ideas are not guaranteed to outcompete classical methods across the board; rather, they open specific trade-offs.

For carefully structured data and for problems where an expressive quantum feature map closely matches the problem’s geometry, experiments have shown measurable improvements in accuracy or compactness of the model. For general-purpose tasks on large, noisy datasets, classical methods remain strong and in many cases preferable.

How Quantum-Enhanced Machine Learning Works in Practice

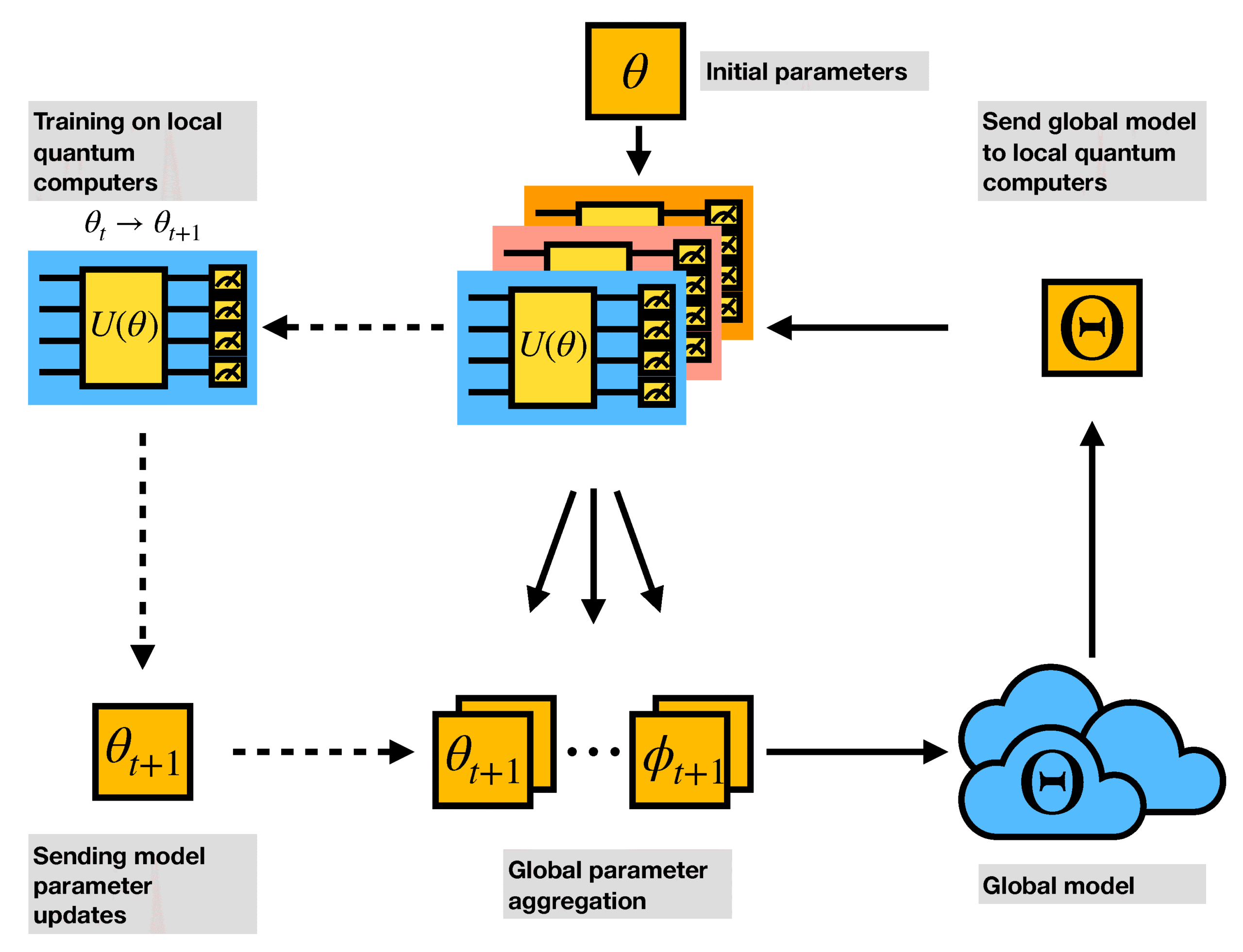

A working hybrid pipeline today often looks like this: preprocess data classically, map selected features into a quantum circuit that prepares a state, measure the circuit to obtain a similarity or feature vector, and feed that output into a classical classifier or optimizer.
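The steps of such a pipeline can be sketched end to end in a few lines. This is a toy illustration under stated assumptions, not a reference implementation: the quantum stage is replaced by the closed-form similarity of an RY angle encoding, the classifier is a simple "highest mean similarity" rule, and the data values are made up. The names `rescale`, `quantum_similarity`, and `predict` are hypothetical.

```python
import math

def rescale(X):
    """Classical preprocessing: map each feature column to [0, pi]."""
    cols = list(zip(*X))
    lo = [min(c) for c in cols]
    hi = [max(c) for c in cols]
    return [[math.pi * (v - l) / (h - l) if h > l else 0.0
             for v, l, h in zip(row, lo, hi)] for row in X]

def quantum_similarity(x, y):
    """Stand-in for the quantum stage.

    Assumption: RY angle encoding, one qubit per feature, for which the
    squared state overlap has this closed form. A device would estimate
    the same quantity from repeated measurements.
    """
    return math.prod(math.cos((a - b) / 2) ** 2 for a, b in zip(x, y))

def predict(x, train_X, train_y):
    """Classical stage: pick the class with the highest mean similarity."""
    scores = {}
    for label in set(train_y):
        members = [t for t, l in zip(train_X, train_y) if l == label]
        scores[label] = sum(quantum_similarity(x, m) for m in members) / len(members)
    return max(scores, key=scores.get)

# Made-up data: four labeled points followed by two unlabeled queries.
raw = [[0.1, 5.0], [0.2, 5.5], [0.9, 9.0], [1.0, 9.5], [0.15, 5.2], [0.95, 9.2]]
labels = [0, 0, 1, 1]
scaled = rescale(raw)
train_X, queries = scaled[:4], scaled[4:]
predictions = [predict(q, train_X, labels) for q in queries]
```

Keeping the quantum stage behind a single function makes it easy to swap in a simulator or a hardware call later, and to swap in a classical kernel as a baseline.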

The quantum stage can be short (tens to hundreds of gates) and is repeated many times to estimate the quantities needed for training.

Three practical considerations shape results:

- Encoding strategy. How you map numbers to quantum amplitudes or phases strongly changes a model’s inductive bias. The same data with two encodings can lead to very different performance.

- Noise and repetition. Current quantum hardware is noisy; obtaining precise estimates requires many circuit runs. That increases runtime and cost.

- Classical overhead. Hybrid training often relies on classical optimizers that evaluate gradients via repeated quantum circuit executions. The classical-quantum looping can be a bottleneck unless the quantum contribution justifies it.
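To make the cost of noise, repetition, and the classical-quantum loop concrete, here is a minimal sketch of hybrid training on the smallest possible circuit. It assumes a single qubit prepared as RY(theta)|0>, measured in the Z basis, with measurements simulated by sampling. Gradients use the parameter-shift rule, which is one common choice and not something the discussion above prescribes. All function names and hyperparameters are hypothetical.

```python
import math
import random

def expectation_z(theta, shots, rng):
    """Estimate <Z> for RY(theta)|0> from `shots` simulated measurements."""
    p0 = math.cos(theta / 2) ** 2  # probability of measuring 0
    zeros = sum(1 for _ in range(shots) if rng.random() < p0)
    return (2 * zeros - shots) / shots

def parameter_shift_grad(theta, shots, rng):
    """Gradient of <Z> with respect to theta from two shifted circuit runs."""
    plus = expectation_z(theta + math.pi / 2, shots, rng)
    minus = expectation_z(theta - math.pi / 2, shots, rng)
    return (plus - minus) / 2

def train(target, theta=0.5, lr=0.4, steps=60, shots=2000, seed=0):
    """Gradient descent on (<Z> - target)^2; also counts circuit executions."""
    rng = random.Random(seed)
    executions = 0
    for _ in range(steps):
        value = expectation_z(theta, shots, rng)
        grad = parameter_shift_grad(theta, shots, rng)
        executions += 3 * shots  # one value estimate plus two shifted runs
        theta -= lr * 2 * (value - target) * grad
    return theta, executions

theta, executions = train(target=0.0)
```

Even this one-parameter toy uses 360,000 simulated circuit executions (60 steps, 3 estimates per step, 2,000 shots each). With more parameters, the parameter-shift rule needs two shifted estimates per parameter, so the loop cost grows accordingly.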

Tooling has matured enough that researchers can prototype without deep hardware expertise.

Libraries such as TensorFlow Quantum, Qiskit, and PennyLane let you build circuits, simulate their behavior, and connect quantum layers to familiar machine-learning frameworks. Use them to test small-scale hypotheses before investing in cloud quantum time.

Evidence and Responsible Evaluation

When deciding whether to apply quantum-enhanced machine learning to a problem, treat claims the same way you would new techniques in classical ML: examine tasks, datasets, baselines, and reproducibility.

Laboratory results that show an advantage tend to share patterns: the datasets are structured or small enough that a carefully designed quantum representation can capture relationships compactly; the classical baselines are strong but built around different inductive biases; and authors provide code or circuit descriptions that let others reproduce the setup.

Conversely, sensational claims that a quantum model “beats deep learning across the board” almost always collapse under careful, repeatable comparison.

A pragmatic checklist you can use when reading papers or vendor claims:

- Are experiment details available (circuit diagrams, encoding method, number of shots)?

- Did the authors compare against well-tuned classical baselines on the same input representation?

- Are results robust across random seeds and multiple datasets, or narrowly tuned to one benchmark?

- Was hardware noise modeled or characterized in the experiment?

Scholarly outlets and preprint repositories are full of relevant work; peer-reviewed journals have rigorous review standards, while preprints offer rapid dissemination. Search for replication studies and follow-up analyses before assuming superiority for production use.

Real Applications and Where Gains Have Appeared

Two application areas keep returning in credible research.

First, classification tasks with complex structure (such as certain types of biological signals or materials descriptors) where classical feature engineering struggles: quantum feature maps can sometimes provide a representation that makes classes more separable with simpler classifiers.

Second, kernel estimation for problems where computing a high-quality kernel classically would be expensive; a quantum device can estimate inner products in a quantum feature space directly.

Beyond those, experiments explore generative modeling, anomaly detection, and optimization, with quantum subroutines replacing bottlenecks in classical pipelines. The takeaway for practitioners is straightforward: pick a narrow task, design an encoding that reflects domain knowledge, and compare thoroughly against classical alternatives.

Limitations You Should Plan For

It’s important to plan with constraints in mind. Current devices are limited in qubit count and coherence time; error correction at scale is not yet available. That means circuit depth and the number of qubits you can rely on are small, and experiments often require many repetitions to get stable estimates.
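A rough budget for those repetitions can be worked out before touching hardware. The sketch below assumes independent shots and a quantity estimated as a measured probability, so the standard error is sqrt(p(1 - p) / shots); it ignores hardware noise, which only makes things worse. For kernel methods it also assumes a symmetric kernel with a known diagonal, so only the pairs above the diagonal need to be measured. Both function names are hypothetical.

```python
import math

def shots_for_standard_error(epsilon, p=0.5):
    """Shots needed so a probability estimate has standard error <= epsilon.

    Assumption: independent shots, binomial statistics; p=0.5 is the worst case.
    """
    return math.ceil(p * (1 - p) / epsilon ** 2)

def gram_matrix_executions(n_points, shots):
    """Total circuit executions to estimate a symmetric kernel matrix.

    Assumption: the diagonal is known, so only n(n-1)/2 pairs are measured.
    """
    pairs = n_points * (n_points - 1) // 2
    return pairs * shots

# Budget for 100 training points at roughly 1% standard error per entry.
shots = shots_for_standard_error(0.01)
total = gram_matrix_executions(100, shots)
```

Because the required shots scale as 1/epsilon squared, halving the target error quadruples the number of runs, and the pair count grows quadratically with the size of the training set.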

Data loading is also a bottleneck: efficiently moving large classical datasets into quantum states remains an unsolved systems problem for many workflows.

From a project-management view, treat quantum components as experimental modules. Expect several rounds of iteration on the encoding and circuit design before seeing promising results, and budget classical baselines and ablations as part of the research cost.

References for Further Reading

- arXiv — TensorFlow Quantum: A Software Framework for Quantum Machine Learning

- Nature — Application of quantum machine learning using quantum kernel algorithms on multiclass neuron M-type classification
